How to Use ChatGPT API? A Beginner's Friendly Tutorial

The ChatGPT API became accessible on March 1, 2023. It allows making requests like those done through the ChatGPT website but in a programmatic manner.

💡 Want to learn more about ChatGPT? Then you’ll love my comprehensive guide on how to use ChatGPT.

To begin using the ChatGPT API, follow these steps:

- Obtain an API key: To use the ChatGPT API, you need to sign up to get an API key.
- Choose a programming language: Select a programming language to interact with OpenAI’s API.
- Install what you need: If you decide to use a library to interact with the ChatGPT API, install it first.
- Code your first request to ChatGPT API: Learn how to write code to interact with the API.
- Use ChatGPT API response: ChatGPT API responds with something; how will you use it?

Let’s start learning how to use the ChatGPT API together with this tutorial.

Looking for information on the new OpenAI Assistants API? Then you might be interested in this article.

What is an API?

API stands for Application Programming Interface. It’s a series of protocols and tools used to build other applications by communicating with what the API exposes.

APIs define how one can interact, how many requests can be made, the format of the request and responses, and much more.

Developers can “plug into” an API to interact with another application without fully understanding how the application works under the hood. They just need to know the API’s various “endpoints”: the addresses, such as URLs, that they can connect to.

With ChatGPT, it means we can connect to an access point that provides more or fewer features than the ChatGPT website. We can create complete applications or add features to an existing application using this API, much like using a cloud service.

How much does ChatGPT API cost?

The cost of using the ChatGPT API is around one cent in US dollars (USD) for 5000 tokens. OpenAI offers $5 worth of free credits for 30 days when you open a new account. That’s more than enough to make all the requests you want for a month.

After that, you’ll need to enter your billing information on the OpenAI website.
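As a rough illustration of the arithmetic, the sketch below converts a token count into an estimated cost using the rate quoted above (about one cent per 5,000 tokens). The function names and the constant are hypothetical, and the rate is only the figure given in this article, so check OpenAI's pricing page for current numbers.

```python
# Rate taken from this article: roughly 1 US cent per 5,000 tokens.
# This is an assumption for illustration, not an official price.
USD_PER_TOKEN = 0.01 / 5000


def estimate_cost_usd(total_tokens: int) -> float:
    """Return the estimated cost in USD for a given number of tokens."""
    if total_tokens < 0:
        raise ValueError("total_tokens must be non-negative")
    return total_tokens * USD_PER_TOKEN


def tokens_for_budget(budget_usd: float) -> int:
    """Return roughly how many tokens a budget in USD would cover."""
    # round() avoids losing a token to floating-point error.
    return round(budget_usd / USD_PER_TOKEN)


if __name__ == "__main__":
    print(f"{estimate_cost_usd(5000):.4f} USD for 5,000 tokens")
    print(f"{tokens_for_budget(5.0):,} tokens for a 5 USD credit")
```

The same function can be fed the token total reported by the API for each call to keep a running estimate of your spending.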

How to get your ChatGPT API key?

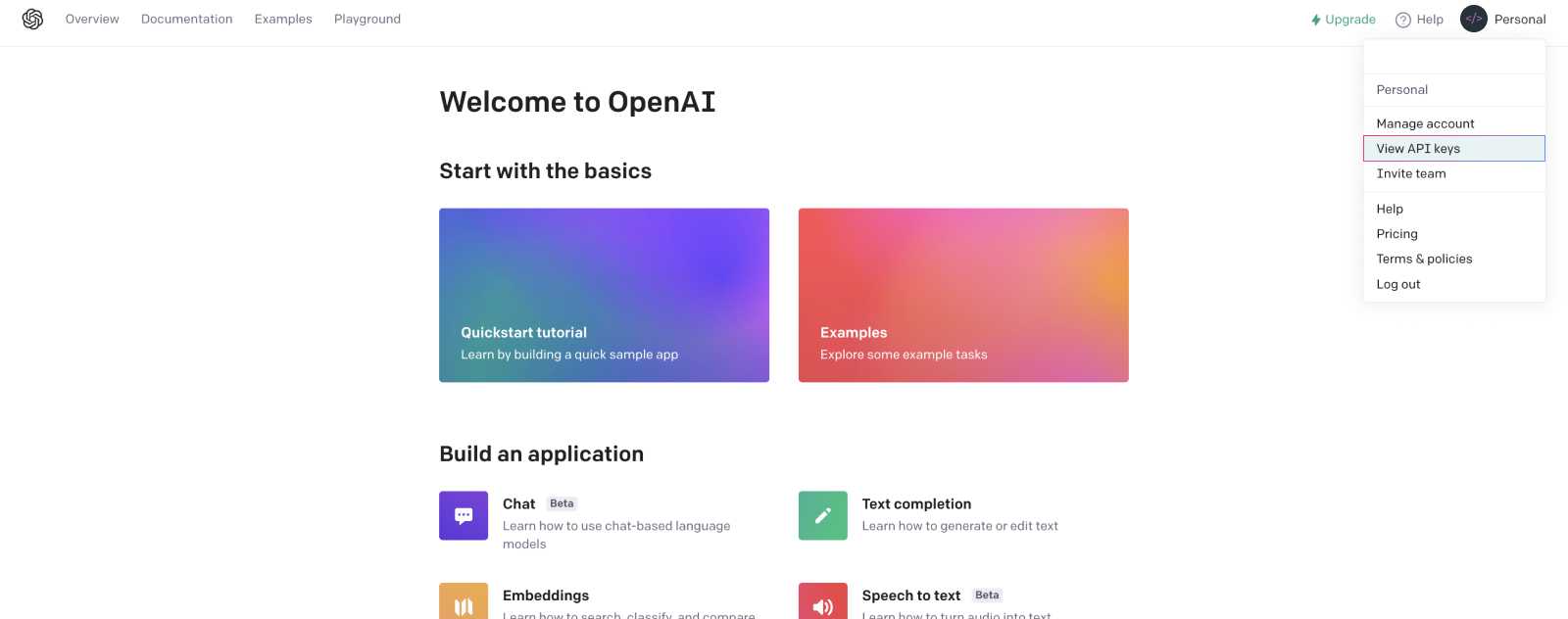

To get your OpenAI API Key and access the ChatGPT API, go to OpenAI’s official website.

Once logged in, navigate to the “View API Keys” tab.

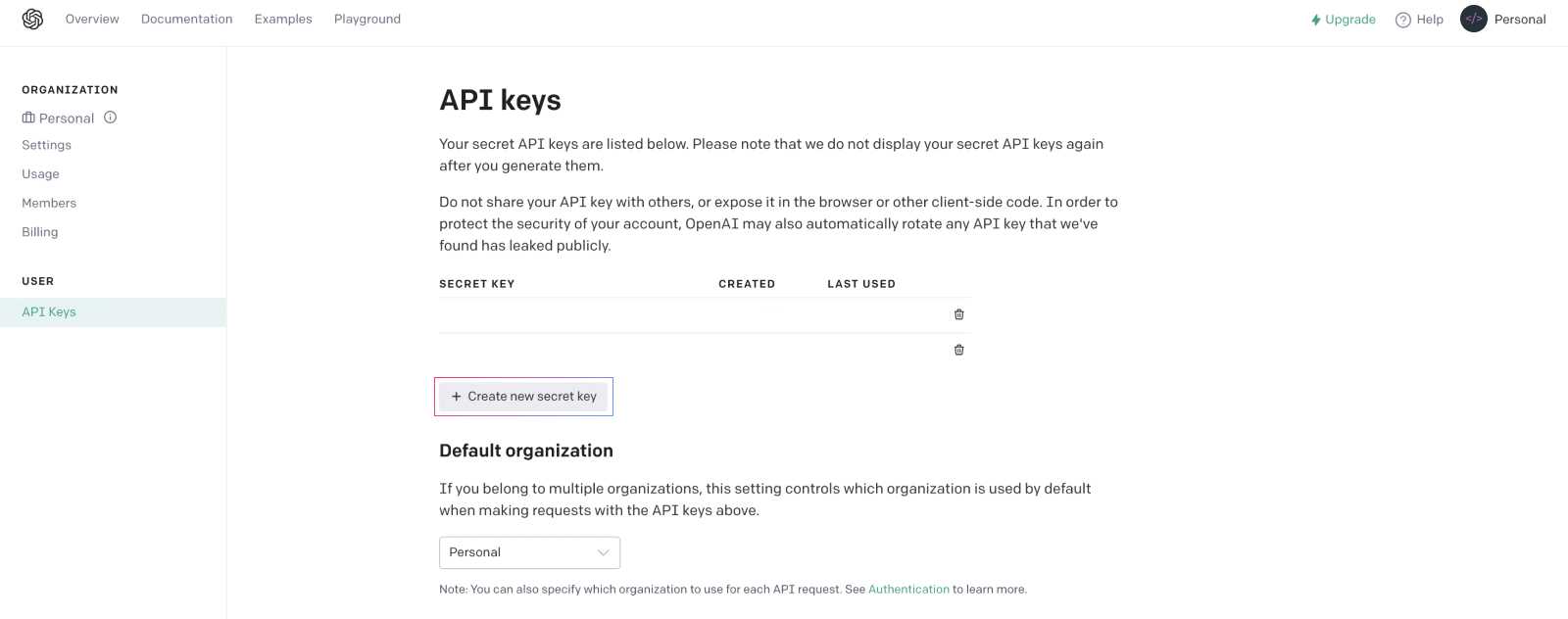

On the API keys page, press the “Create new secret key” button, and you’ll get your API key! 🗝️

This key will allow you to interact with the ChatGPT API and other OpenAI APIs in your various projects.

⚠️ Do not share your key publicly; keep it secure by storing it as an environment variable. An API key grants access to your OpenAI account; someone with malicious intent could use this key, and you’ll be responsible for the usage costs.
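A minimal sketch of the environment-variable approach in Python follows. The variable name `OPENAI_API_KEY` and the helper `load_api_key` are assumptions chosen for illustration; use whatever name you set in your own shell or deployment.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Read the API key from an environment variable instead of hardcoding it."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        # Fail early with a clear message rather than sending an empty key.
        raise RuntimeError(f"Environment variable {env_var} is not set")
    return key


if __name__ == "__main__":
    try:
        api_key = load_api_key()
        # Never print the key itself; only confirm that it was found.
        print(f"Key loaded ({len(api_key)} characters)")
    except RuntimeError as err:
        print(err)
```

Keeping the key out of the source file also means it will not end up in version control by accident.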

Which programming language for ChatGPT API?

The access points of the ChatGPT API do not require the use of a specific programming language.

You can make your requests with cURL or in any programming language.
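To illustrate that no dedicated library is needed, here is a sketch that builds this kind of HTTP request with Python's standard library only. The endpoint URL and JSON fields are the ones used in this tutorial; the helper name `build_chat_request` is hypothetical, and the network call is left commented out so the snippet runs without a key.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, prompt: str,
                       model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) a POST request for the chat completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_chat_request("YOUR_OPENAI_API_KEY", "YOUR_CHATGPT_REQUEST")
    print(request.get_method(), request.full_url)
    # To actually send it (requires a valid key and network access):
    # with urllib.request.urlopen(request) as response:
    #     print(json.load(response))
```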

Prerequisites for interacting with ChatGPT API

Although you don’t need to install anything to interact with ChatGPT endpoints, OpenAI offers two official libraries in Python and JavaScript.

You can install openai for Python using the pip command:

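    pip install "openai<1"

And for Node.js, there’s also a library available that can be installed with npm:

    npm install openai@3

The code in this tutorial uses the interfaces of these earlier releases (openai.ChatCompletion in Python, Configuration and OpenAIApi in Node.js), which were replaced in later major versions of both libraries. That is why the install commands pin the version.

Coding a request to ChatGPT API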

To code a request to the ChatGPT API, you’ll need:

- YOUR_OPENAI_API_KEY, which is the API key retrieved earlier in this tutorial.
- YOUR_CHATGPT_REQUEST, which is the prompt you’d normally write on the ChatGPT tool’s website.

Example code for interacting with the ChatGPT API using curl:

    curl https://api.openai.com/v1/chat/completions \
      -H 'Content-Type: application/json' \
      -H 'Authorization: Bearer YOUR_OPENAI_API_KEY' \
      -d '{
        "model": "gpt-3.5-turbo",
        "messages": [{"role": "user", "content": "YOUR_CHATGPT_REQUEST"}]
      }'

Example code for interacting with the ChatGPT API in Python:

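    # Uses the pre-1.0 interface of the openai Python library
    import openai

    # Ideally, read the key from an environment variable instead of hardcoding it
    openai.api_key = "YOUR_OPENAI_API_KEY"

    # Send a single user message to the chat completions endpoint
    completion = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "user", "content": "YOUR_CHATGPT_REQUEST"}
        ]
    )

    # Print the message object returned for the first choice
    print(completion.choices[0].message)

Example code for interacting with the ChatGPT API in JavaScript: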

    // Uses the 3.x interface of the openai Node.js library
    const { Configuration, OpenAIApi } = require("openai")

    const configuration = new Configuration({
      apiKey: "YOUR_OPENAI_API_KEY", // Ideally, you'd have placed your API key in an environment variable
    })
    const openai = new OpenAIApi(configuration)

    // In a CommonJS script, await is only valid inside an async function
    async function main() {
      const completion = await openai.createChatCompletion({
        model: "gpt-3.5-turbo",
        messages: [{ role: "user", content: "YOUR_CHATGPT_REQUEST" }],
      })
      console.log(completion.data.choices[0].message)
    }

    main()

ChatGPT works with the GPT-3.5 model. That’s why we provide the "gpt-3.5-turbo" model in our different requests.

⚠️ If you get a response saying you’ve reached the maximum usage with the API, you can create a new account to enjoy free credits. You can also decide to link a credit card; OpenAI’s API costs very little.

Using the ChatGPT API response

If your request went well, you’ll get a response from the ChatGPT API similar to a response you’d get when using the ChatGPT website.

With curl, you’ll see the response in choices[0].message.content:

    {
      ...
      "choices": [{
        ...
        "message": {
          ...
          "content": "RESPONSE_TO_YOUR_REQUEST"
        },
        ...
      }],
      ...
    }
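If you capture the raw JSON printed by curl, the same path can be followed with Python's standard `json` module. The sample payload below is a trimmed, made-up response used only to show the structure (a real response contains more fields), and `extract_reply` is a hypothetical helper name.

```python
import json

# A trimmed, made-up response body that follows the structure shown above.
raw_response = """
{
  "choices": [
    {
      "message": {
        "role": "assistant",
        "content": "RESPONSE_TO_YOUR_REQUEST"
      }
    }
  ]
}
"""


def extract_reply(body: str) -> str:
    """Return the text of the first choice from a chat completion response."""
    data = json.loads(body)
    choices = data.get("choices") or []
    if not choices:
        raise ValueError("The response contains no choices")
    return choices[0]["message"]["content"]


if __name__ == "__main__":
    print(extract_reply(raw_response))
```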

In Python, you can access it with:

    completion.choices[0].message.content

And in JavaScript, here’s where you’ll find your response:

    completion.data.choices[0].message.content

How to keep conversation history with ChatGPT when using the API?

As we’ve seen, the ChatGPT API is just a single access point. Unlike the ChatGPT website, which keeps your prompts in memory for a real conversation with the AI, the API won’t retain your previous requests.

Therefore, it’s up to us to manage the conversation context using a different role in our requests: that of an assistant.

The more observant among you may have noticed that, in addition to the content, we pass a role in each message of our requests:

    messages=[
        {"role": "user", "content": "The prompt we send to the ChatGPT API"}
    ]

The role can be one of the following:

- user is for the prompts you send to the ChatGPT API, with the instructions you want to give the assistant. These messages can be generated by a user or hardcoded by a developer in an application.
- assistant is the artificial intelligence: it is the role of the messages that contain its responses. It’s crucial to send these messages from ChatGPT back to the API if you want to have a conversation.
- system defines the behavior of the assistant. The current model (gpt-3.5-turbo-0301) does not always pay strong attention to it, but it will become increasingly important with future versions of the AI model.

We’ll often have a structure that begins by defining the AI’s behavior and then a conversation between the user and the AI.

- system message
- user message
- assistant message
- user message
- assistant message
- …

As it’s up to us to manage the conversation history with ChatGPT, we’ll create an array or a list and keep each message in it.

Let’s see a complete example in Python:

    import os
    import openai

    openai.api_key = os.getenv("...")

    # Declare a list to keep the history of all our messages with ChatGPT
    messages = []

    # Optional: defines the behavior the assistant should adopt
    messages.append({"role": "system", "content": "You are a Python developer"})

    # A common question we might ask ChatGPT
    messages.append({"role": "user", "content": "Explain how to do a ternary operation in Python"})

    # I create my request by choosing the model and sending my messages
    completion = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=messages
    )

    # The response provided by ChatGPT
    response_chatgpt = completion.choices[0].message.content
    print(response_chatgpt)

ChatGPT explains to us that the if-else conditional construction does not exist in Python and is different from other languages.

Let’s continue our conversation by adding the API response to our messages list:

    # Include the response in the history of messages
    messages.append({"role": "assistant", "content": response_chatgpt})

    # I ask a new question
    messages.append({"role": "user", "content": "So the if-else conditional construction does not exist?"})

    completion = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=messages
    )

    response_chatgpt = completion.choices[0].message.content
    print(response_chatgpt)

By asking my second question “So the if-else conditional construction does not exist?”, ChatGPT understands that I’m talking about ternary conditions in Python and not just if-else conditions in general.

I would have received a completely different response to this question if I had asked it without providing the context of the message history to the ChatGPT API.
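The bookkeeping above can be wrapped in a small helper. The sketch below is one possible shape, not an official pattern: the `Conversation` class and the `send` callable are hypothetical, and `send` stands in for whatever function actually calls the API and returns the reply text, which lets the example run without a key.

```python
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]


class Conversation:
    """Keep the message history and resend it with every new question."""

    def __init__(self, send: Callable[[List[Message]], str],
                 system_prompt: Optional[str] = None) -> None:
        self._send = send
        self.messages: List[Message] = []
        if system_prompt:
            self.messages.append({"role": "system", "content": system_prompt})

    def ask(self, prompt: str) -> str:
        """Add the user prompt, call the API, and store the assistant reply."""
        self.messages.append({"role": "user", "content": prompt})
        # Pass a copy so the sender cannot alter the stored history.
        reply = self._send(list(self.messages))
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def fake_send(messages: List[Message]) -> str:
    """Stand-in for a real API call: reports how many messages it received."""
    return f"received {len(messages)} message(s)"


if __name__ == "__main__":
    chat = Conversation(fake_send, system_prompt="You are a Python developer")
    print(chat.ask("Explain how to do a ternary operation in Python"))
    print(chat.ask("So the if-else conditional construction does not exist?"))
```

In a real script, `send` would wrap the API call shown earlier and return the content of the first choice.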

What is the maximum request size with the ChatGPT API?

We’ve seen that the ChatGPT API costs 1 cent for about 5000 tokens. The response object you get from the API includes a "usage" field, whose "total_tokens" value shows the total number of tokens used in the API call.

    {
      ...
      "usage": {
        "completion_tokens": ...,
        "prompt_tokens": ...,
        "total_tokens": ...
      }
    }

The longer your conversation history array or list, the larger this total token count will grow. Note that this number cannot exceed 4096 tokens.

If you have a lengthy conversation with the ChatGPT API, it might be a good idea to summarize your messages.

You can do this by asking the ChatGPT API itself! 🦾
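One way to sketch this idea: once the history grows past a chosen number of messages, replace the oldest exchanges with a single summary and keep only the most recent ones. Everything here is an assumption for illustration: the `compact_history` helper, the `max_messages` and `keep_last` thresholds, and the `summarize` callable, which stands in for a request asking the API to summarize the given messages.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def compact_history(messages: List[Message],
                    summarize: Callable[[List[Message]], str],
                    max_messages: int = 10,
                    keep_last: int = 4) -> List[Message]:
    """Replace older messages with one summary when the history gets long."""
    if keep_last < 1:
        raise ValueError("keep_last must be at least 1")

    # Keep a leading system message (the assistant's behavior) untouched.
    has_system = bool(messages) and messages[0]["role"] == "system"
    system = messages[:1] if has_system else []
    dialogue = messages[len(system):]

    if len(dialogue) <= max(max_messages, keep_last):
        return list(messages)  # Short enough: nothing to compact.

    old, recent = dialogue[:-keep_last], dialogue[-keep_last:]
    summary = {
        "role": "system",
        "content": "Summary of the earlier conversation: " + summarize(old),
    }
    return system + [summary] + recent


if __name__ == "__main__":
    history = [{"role": "system", "content": "You are a Python developer"}]
    for i in range(6):
        history.append({"role": "user", "content": f"question {i}"})
        history.append({"role": "assistant", "content": f"answer {i}"})

    # Stand-in summarizer; a real one would send `old` to the API.
    compacted = compact_history(history, lambda old: f"{len(old)} earlier messages")
    for message in compacted:
        print(message["role"], "->", message["content"])
```

Counting messages is a crude proxy for counting tokens; the token total reported by the API is the more reliable signal for deciding when to compact.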

Going Further with ChatGPT API!

And there you have it, that’s all you need to know to interact with the ChatGPT API endpoint.

Now that you know how to use it, it’s up to you to create scripts to automate certain tasks or even build an application like a SaaS that harnesses the power of ChatGPT.

The sky’s the limit here; there’s so much yet to be invented in terms of products and services that utilize artificial intelligence!

Some links in this article are affiliated, with no additional cost to you in case of a click. Thank you for your support!

By the way, if you want to learn more about OpenAI APIs, I highly recommend the excellent course in English "Mastering OpenAI Python APIs: Unleash the Power of GPT4" on Udemy!

Hey, I'm Thomas 👋 Here, you'll find articles about tech, and what I think about the world. I've been coding for 20+ years, built many projects including Startups. Check my About/Start here page to know more :).